We propose a novel, semantically aware paradigm for image extrapolation that enables the addition of new object instances.

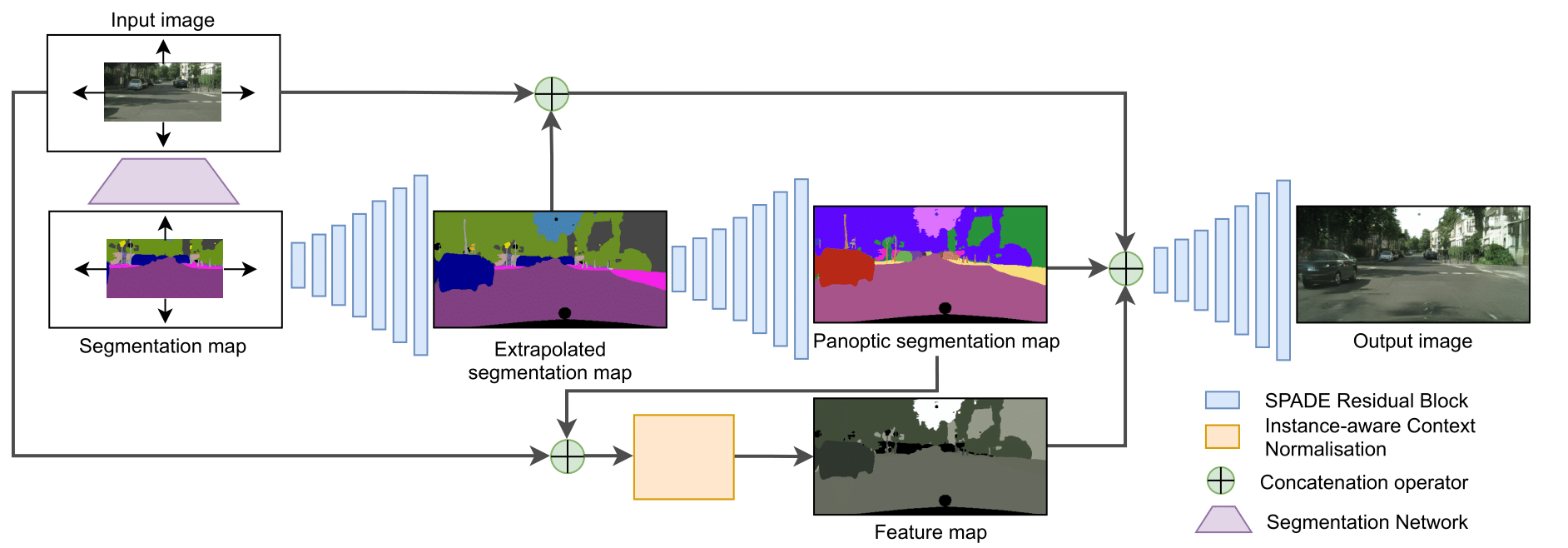

Previous methods limit extrapolation to extending objects that already exist in the image. Our approach, in contrast, focuses not only on (i) extending the objects already present but also on (ii) adding new objects in the extended region based on the context. To this end, for a given image, we first obtain an object segmentation map using a state-of-the-art semantic segmentation method. The resulting segmentation map is fed into a network to compute the extrapolated semantic segmentation and to estimate the corresponding panoptic segmentation maps. The input image and the obtained segmentation maps are then used to generate the final extrapolated image.

We conduct experiments on the Cityscapes and ADE20K-bedroom datasets and show that our method outperforms all baselines in terms of FID and similarity in object co-occurrence statistics.
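To make the notion of object co-occurrence statistics concrete, the sketch below counts how often pairs of classes appear in the same image and compares two image collections. It is only an illustration: the function names `cooccurrence_probs` and `cooccurrence_gap` are hypothetical, and the mean absolute difference used here is an assumed stand-in, not necessarily the similarity measure used in our experiments.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_probs(label_sets):
    """Fraction of images in which each unordered class pair appears together."""
    counts = Counter()
    for labels in label_sets:
        # Sort so that each unordered pair always has the same key.
        for pair in combinations(sorted(set(labels)), 2):
            counts[pair] += 1
    n = len(label_sets)
    return {pair: c / n for pair, c in counts.items()}


def cooccurrence_gap(real_sets, generated_sets):
    """Mean absolute difference over all pairs seen in either collection.

    Assumed comparison for illustration; 0.0 means identical statistics.
    """
    real = cooccurrence_probs(real_sets)
    gen = cooccurrence_probs(generated_sets)
    pairs = set(real) | set(gen)
    if not pairs:
        return 0.0
    return sum(abs(real.get(p, 0.0) - gen.get(p, 0.0)) for p in pairs) / len(pairs)
```

Each element of `real_sets` or `generated_sets` is the set of class names present in one image, for example as read off its segmentation map. A method that only extends existing objects would tend to miss class pairs that the real data contains, which shows up as a larger gap.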

In principle, SemIE can zoom out indefinitely: an image can be zoomed out to a considerable extent by recursively passing it through our model.
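The recursive zoom-out can be sketched as a simple loop that feeds each output back in as the next input. This is a minimal sketch under assumptions: `zoom_out` is a hypothetical helper, and `model` stands for any callable that maps an image to its extrapolated version; any resizing between passes is left to that callable.

```python
def zoom_out(image, model, steps):
    """Apply `model` recursively and return every intermediate result.

    `model` is an assumed callable: image -> extrapolated image.
    The returned list starts with the original image.
    """
    frames = [image]
    for _ in range(steps):
        # The previous output becomes the next input.
        frames.append(model(frames[-1]))
    return frames
```

In practice the quality of each pass depends on the previous one, so errors can accumulate as `steps` grows.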

SemIE outperforms existing methods on the Cityscapes and ADE20K-bedroom datasets by generating realistic extrapolated images. Apart from SemIE and SPGNet, all methods fail to generate new objects in the extrapolated region.

Stage 1: The input image is fed into a pre-trained segmentation network to obtain its label map. Stage 2: The stage 1 output is fed into a network to obtain the extrapolated label map. Stage 3: The extrapolated label map is fed into another network to obtain the panoptic label map. Stage 4: The input image, the extrapolated label map, and the panoptic label map are used in conjunction with the instance-aware context normalization module to obtain the final extrapolated image.
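The data flow between the four stages can be sketched as follows. This is not the released implementation: the class `ExtrapolationPipeline` and its field names are hypothetical, and each stage is an arbitrary callable standing in for the corresponding network. The instance-aware context normalization module is assumed to live inside the stage 4 callable.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ExtrapolationPipeline:
    """Hypothetical wiring of the four stages; each field is a stand-in network."""

    segment: Callable[[Any], Any]               # Stage 1: image -> label map
    extrapolate_labels: Callable[[Any], Any]    # Stage 2: label map -> extrapolated label map
    to_panoptic: Callable[[Any], Any]           # Stage 3: extrapolated label map -> panoptic label map
    synthesize: Callable[[Any, Any, Any], Any]  # Stage 4: (image, labels, panoptic) -> image

    def __call__(self, image: Any) -> Any:
        label_map = self.segment(image)
        extrapolated = self.extrapolate_labels(label_map)
        panoptic = self.to_panoptic(extrapolated)
        # Stage 4 sees the original image as well as both extrapolated maps.
        return self.synthesize(image, extrapolated, panoptic)
```

Note that only stage 4 consumes the input image directly; stages 2 and 3 operate purely on label maps, which is what lets new object instances be placed before any pixels are generated.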